Here's The Real Story Behind The Facial Recognition That You'll Encounter During Travel

Tension around the use of AI in everyday life is clearly on the rise. From AI-driven algorithms that determine levels of economic access to AI-powered facial recognition systems, the application of such emerging technology will be one of the biggest cultural narratives of our time. Whether what happens next proves a massive detriment or an aid to society will depend on all of us becoming not only more vocal but also more thoughtful about goals and solutions.

Just this week, UCLA announced an end to its planned campus-wide facial recognition implementation after intense protest organized by digital rights group Fight for the Future and expanded by university students. Under the guise of creating a safer campus, UCLA had been planning to use Amazon’s facial recognition software, Rekognition. Students argued that such usage would not only infringe upon privacy but that the system was consistently faulty.

Yet while some may see this as a win against widespread use of facial recognition software, the same week London’s Metropolitan Police announced that it will use live facial recognition technology to aid in policing the city, despite consistent errors. As a matter of fact, in this country, 49% of people agree that facial recognition should be used in stores if it catches shoplifters.

Thus, between Clearview, Amazon and more, there seems to be just as much of a race to infuse society with this technology as there is to stop it. The former may well be driven by the fact that, as soon as 2024, the global facial recognition market could generate $7 billion in revenue. The travel industry alone will be massive. By 2023, 97% of airports will roll out facial recognition technology, according to Reservations.com.

Yet extensive media properties are now being produced to educate more of the general public about such impending technology. Indeed, one of the most buzzed-about films at the recent Sundance Film Festival was “Coded Bias,” a film that hits most of us right where we live today, in an era driven by data capture. The work is nothing short of a provocative and seamless look at issues around bias and flaws in everything from facial recognition to algorithms that decide our privacy in free movement, our ability to obtain credit and much more.

Incredibly directed by TED fellow Shalini Kantayya, the film follows MIT Media Lab researcher Joy Buolamwini as she stumbles upon the fact that a particular application she wanted to utilize cannot be activated because it is only sensitive to fairer skin tones. Indeed, 35% of facial recognition errors happen when identifying dark-skinned women, compared to 1% for white males.

Buolamwini, a darker-skinned African American, then begins to investigate the facial recognition offerings from companies such as IBM, Microsoft, and Amazon, only to uncover massive bias that leads to everything from misidentification of individuals in programs that law enforcement is using to much more.

She then intersects with equally brilliant mathematician Cathy O’Neil, who is simultaneously researching and uncovering massive bias in algorithms in general, and the two women eventually make their way to Capitol Hill to testify before congressional members, including Alexandria Ocasio-Cortez, to encourage new legislation in this area.

Indeed, within the film Buolamwini says that data is destiny, and the point is borne out in a variety of situations captured in the film, from a low-income housing building in Brownsville, NY, where there are deep tensions with tenants of color who feel more than spied upon thanks to cameras and the facial recognition entrance that the landlord has implemented, to the unjustified stop-and-search of a 14-year-old in London. And it’s certainly not the first time. Less than a year ago, a man sued for false arrest based on misidentification by a facial recognition platform.

As we all know, technology is neutral. It is the human intent behind it that either weaponizes it or drives greater peace. Indeed, the AI-for-good camp would want to create a larger seat at the table soon, before the public completely shuns technology that holds great promise.

This is a game about data.

“Yet data is not necessarily truth,” explains Sandra Rodriguez, Ph.D., lead artist of the New Frontier AI project Chomsky vs. Chomsky: First Encounter, a conversation on AI with an AI system that also premiered in Sundance’s 2020 New Frontier category. “Companies trying to create solutions for facial recognition systems should first consider why identifying a face is so important in the first place, and if it is so important, why leave it to machines that cannot accurately perform a task any human can.”

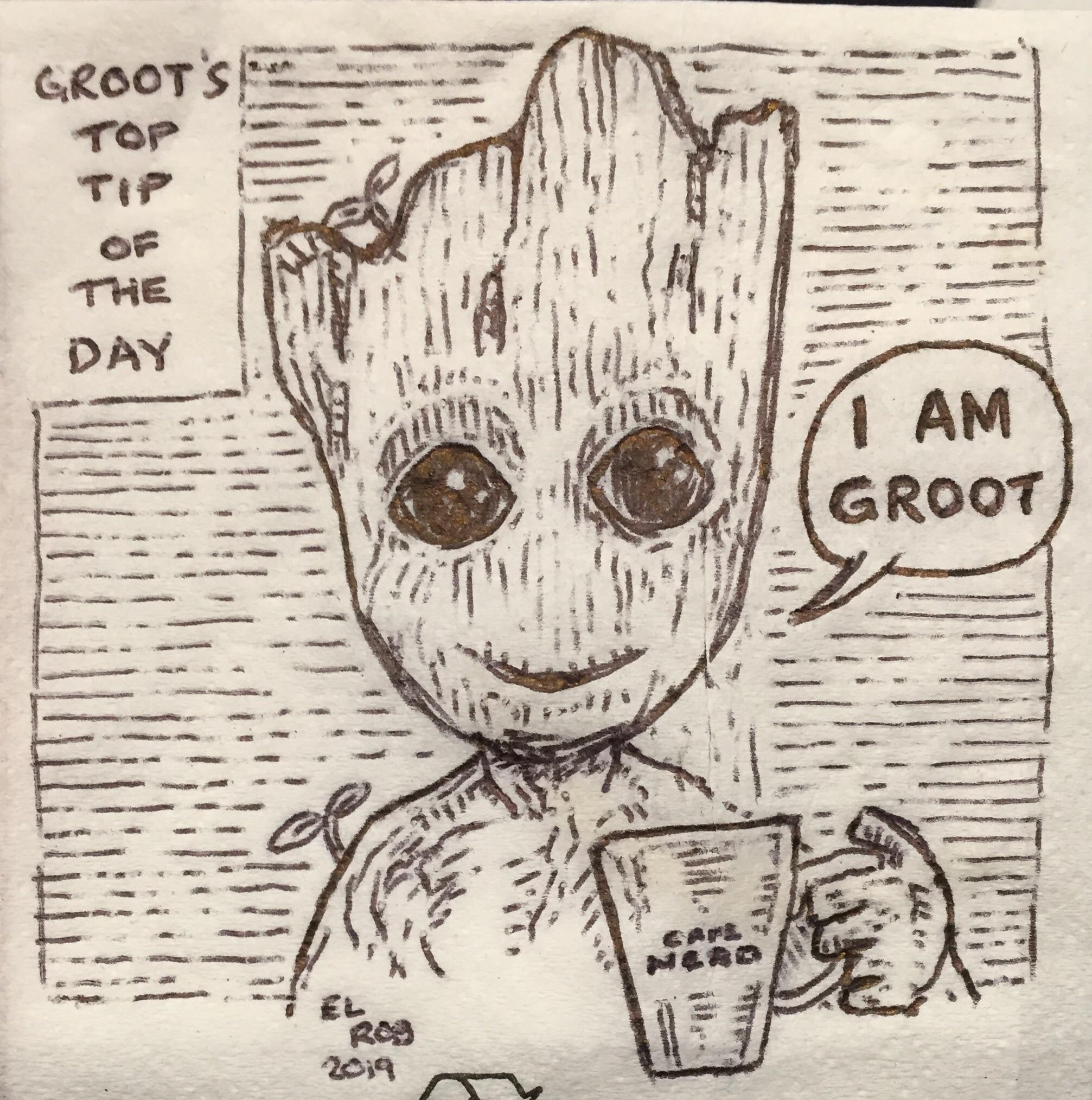

She continues, “Contrary to humans, who can identify or recognize faces even in abstract art, facial recognition does not, per se, recognize a face. It recognizes contrasts in light/dark in a certain pattern and spots, following a certain number of dots and shapes. Depending on the system, these can be 8 to 16 to 32, to 40ish symmetric dots it tries to find on an oblong contrast shape recognized as ‘face.’ These lines and dots are what we humans could identify as eyes, nostrils, eyebrows, etc.”

According to Rodriguez, she put such a system to the test recently and was shocked by the results. “I drew cartoony faces with a pen on a napkin,” she explains. “The facial recognition system would still detect it as a face and would try to identify a gender and age. Easy to fool a system.”

Thus, the major opportunity and competitive advantage lies not only with companies that can successfully create diverse teams to problem-solve but with those that can also create both internal and industry-standard ethics around such technology and lead the way in their adoption. Such considerations involve even what we think of as small things, such as getting lighting right so that the applications are accurate.

However, the largest opportunity just may be understanding the psycho-social concerns around such technology and being able to adjust for them. How can opt-outs be created for those who have previously been screened through a periodic system similar to that of the TSA? How can safeguards and multi-step authentication make for a system that is more accurate and trustworthy?

Yet “Coded Bias” also explores concerns around weighted, AI-driven algorithms in credit applications and more. Such issues are clearly evident in the recent pushback concerning the algorithms behind the new Apple credit card from Goldman Sachs, which showed a clear bias against female applicants. Even Apple co-founder Steve Wozniak reported that he received ten times more credit than his wife even though they have no separate assets.

Thus, neither your face nor your wallet and economic lifestyle may be safe.

"Credit score is an extremely important indicator in terms of the American economy, which has one of the most credit-driven societies in the world," explains Peter Gloger, Senior Machine Learning Engineer at Netguru.

He continues, "The models used in assessing credit risk are based on linear relationships, that is, logistic regression. Let's assume the model determining it used the variable ‘client uses other credit cards.' In this case, the answer would turn from ‘No’ into a ‘Yes’ just like that. We can also assume the data the model was trained on had such examples and in most cases getting another card meant problems with repaying the loan for the original card. But that may not be the case in reality."
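To make Gloger's example concrete, here is a minimal sketch of a logistic regression score, assuming two made-up input variables. The feature names, weights and intercept are hypothetical and not taken from any real lender's model; the point is only to show how flipping the single variable "uses other credit cards" from "No" to "Yes" shifts the predicted risk.

```python
import math

# Hypothetical coefficients for illustration only; not from any real scoring model.
INTERCEPT = -2.0
WEIGHTS = {
    "uses_other_credit_cards": 0.9,    # 1 if the client holds another card, else 0
    "missed_payments_last_year": 0.6,  # number of missed payments
}

def default_probability(features):
    """Logistic regression: sigmoid of the intercept plus the weighted sum of features."""
    z = INTERCEPT + sum(WEIGHTS[name] * value for name, value in features.items())
    return 1.0 / (1.0 + math.exp(-z))

# Same client, before and after taking out a second card.
before = default_probability({"uses_other_credit_cards": 0, "missed_payments_last_year": 0})
after = default_probability({"uses_other_credit_cards": 1, "missed_payments_last_year": 0})
print(f"before new card: {before:.3f}, after new card: {after:.3f}")
```

Nothing about the client's actual ability to repay changed between the two calls; only the one input did, which is the gap between model and reality that Gloger describes.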

Gloger says that initially, machine learning algorithms were able to capture the complex interconnections between credit score indicators much more precisely than the more traditional methods but that they had one very important drawback. Most of them lacked intuitive “explainability” essential for the customers and regulators to trust in their judgment. "Currently, a lot of effort is being put into creating modern methods in a way that clearly gives insights on how the algorithm made its decision without compromising its accuracy and complexity. The sooner this gets adopted the better, for both banking institutions and their customers," explains Gloger.
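For a simple linear model, the kind of explanation Gloger refers to can be sketched as a per-variable breakdown of the score. The weights and the applicant below are hypothetical, and this is only an illustration of the idea; the newer methods he mentions aim to give comparable breakdowns for far more complex models.

```python
# Hypothetical weights for illustration; a real model would learn these from data.
WEIGHTS = {
    "uses_other_credit_cards": 0.9,
    "missed_payments_last_year": 0.6,
    "years_as_customer": -0.2,
}

def explain(features):
    """Return each feature's contribution to the log-odds, largest magnitude first."""
    contributions = {name: WEIGHTS[name] * value for name, value in features.items()}
    return sorted(contributions.items(), key=lambda item: abs(item[1]), reverse=True)

applicant = {
    "uses_other_credit_cards": 1,
    "missed_payments_last_year": 2,
    "years_as_customer": 5,
}
for name, contribution in explain(applicant):
    # A positive contribution pushes the score toward "risky", a negative one away from it.
    print(f"{name}: {contribution:+.2f}")
```

A breakdown like this lets a customer or regulator see which inputs drove a decision, rather than having to take the final number on trust.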

However, Kareem Saleh, an EVP at ZestFinance, an AI software company for credit, who spent several years with the Obama Administration, gives even deeper insight. "The people who make traditional credit scores are not trying to be biased against anyone, and they strive to be fair. But a seemingly objective model trained on historical data is going to reflect and exacerbate historic discrimination and bias."

For example, he says that because certain groups have not historically had ready access to safe and responsible credit, a model could incorrectly predict that people in those groups are high credit risks.

Truly, in an era of algorithms powered by deepening AI, credit applications and even job-hunting seem a trickier labyrinth each day. “Coded Bias” also includes a shocking example of algorithms rejecting every single female applicant for a particular position based on previous patterns.

According to a Reuters report, for example, “In effect, Amazon’s system taught itself that male candidates were preferable. It penalized resumes that included the word ‘women’s,’ as in ‘women’s chess club captain.’ And it downgraded graduates of two all-women’s colleges, according to people familiar with the matter. They did not specify the names of the schools.” Though the company has scrapped this program, myriad others are working on perfecting the union of AI and resume selection. The demand is very present: a recent survey by CareerBuilder found that 55 percent of U.S. human resource managers said that AI would be a critical part of their work within the next five years.

“We keep trying to ‘predict’ from patterns,” adds Rodriguez. “We keep trying to identify in our past acquisition of information which we call data, truths that can hold in the future. We disregard the context, culture and flaws of this acquisition of information. If the data pools are biased (and they always are, culturally, historically, contextually), then that bias is simply reinforced by systems trained to algorithmically find patterns and correlations.”
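The reinforcement Rodriguez describes can be sketched with a deliberately tiny toy. The hiring history below is fabricated for illustration and the scoring rule is an assumption chosen for simplicity; it is not how Amazon's system, or any real resume screener, is known to work. The "model" only learns how often resumes containing each keyword were hired in the past, then scores new resumes by those rates.

```python
from collections import defaultdict

# Fabricated toy history for illustration: (resume keywords, was the candidate hired?)
HISTORY = [
    ({"chess", "captain"}, True),
    ({"chess", "captain"}, True),
    ({"women's", "chess", "captain"}, False),
    ({"women's", "chess", "captain"}, False),
    ({"debate", "captain"}, True),
]

def learn_hire_rates(history):
    """For each keyword, compute the share of past resumes containing it that were hired."""
    seen, hired = defaultdict(int), defaultdict(int)
    for words, was_hired in history:
        for word in words:
            seen[word] += 1
            hired[word] += int(was_hired)
    return {word: hired[word] / seen[word] for word in seen}

def score(resume_words, rates):
    """Average the learned hire rates of the keywords the model has seen before."""
    known = [rates[word] for word in resume_words if word in rates]
    return sum(known) / len(known) if known else 0.0

rates = learn_hire_rates(HISTORY)
score_without = score({"chess", "captain"}, rates)
score_with = score({"women's", "chess", "captain"}, rates)
print(f"without \"women's\": {score_without:.2f}, with \"women's\": {score_with:.2f}")
```

Because no past resume containing "women's" was hired in this toy history, the word drags down an otherwise identical resume. The pattern in the data is real; what it reflects is the past decisions, not the candidates.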

Certainly, the debates and narratives around this subject as it intersects with civil liberties will be among the biggest of our time. Solving for this will take our best minds and a wide breadth of minds committed to creating solutions that cover all types of concerns and lifestyles. It is definitely no easy task, but the need and the complexity in this part of the industry make it ripe for those who want to create a new path that truly benefits society as much as it drives business.

Adds Reid Blackman, Ph.D., an Ernst & Young Ethical Advisor on their AI Advisory Board, “It’s worth highlighting that this is a place where regulation is needed. Even Microsoft thinks that facial recognition technology needs to be regulated. But that aside, companies need to think very carefully about what they’re creating, how they will deploy it, and who will have access to it. There need to be engagements with stakeholders to see the ways in which their technologies could be used to harm people. There must be clear guidelines about who is allowed access to the technology, how they are allowed to use it, and with whom they are allowed to share it, if anyone. Failing to do this well will result in a tremendous loss of trust, which will only hasten a level of overall regulation that those companies are desperate to avoid in the first place.”